Over the past year, the healthcare industry has been flooded with AI agent demos, and it has never been easier to build one. In a weekend, a team can select a model, wrap it in a UI, script a few flows, and produce something that looks magical and capable, such as a healthcare assistant. But the distance between a polished demo and a production-ready agent that operates safely inside a health system is measured in years, not days.

Beneath the excitement lies a quieter reality, one that only becomes visible when you try to deploy an agent in a real health system.

Building a healthcare AI agent is like exploring an iceberg.

Above the waterline, everything looks simple. Below the surface, out of sight, is where the real complexity and the real work live.

Most teams don’t discover this until they move beyond a proof of concept and confront the realities of clinical data, workflows, governance, and scale. This post unpacks that hidden work, not to discourage innovation, but to give healthcare leaders and engineers a realistic picture of what it takes to build and scale safe, reliable AI agents. It also explains why so many organizations that start with “let’s build it ourselves” later rethink that path.

The Surface: Why AI Agents Look & Feel Like Magic

The promise of simplicity feels like magic, but after you've seen one, five, or a dozen demos, an obvious pattern emerges. Most healthcare agent demos include the same three things:

- A conversational interface

- A model generating fluent responses

- A handful of scripted workflows that “seem” connected to healthcare data

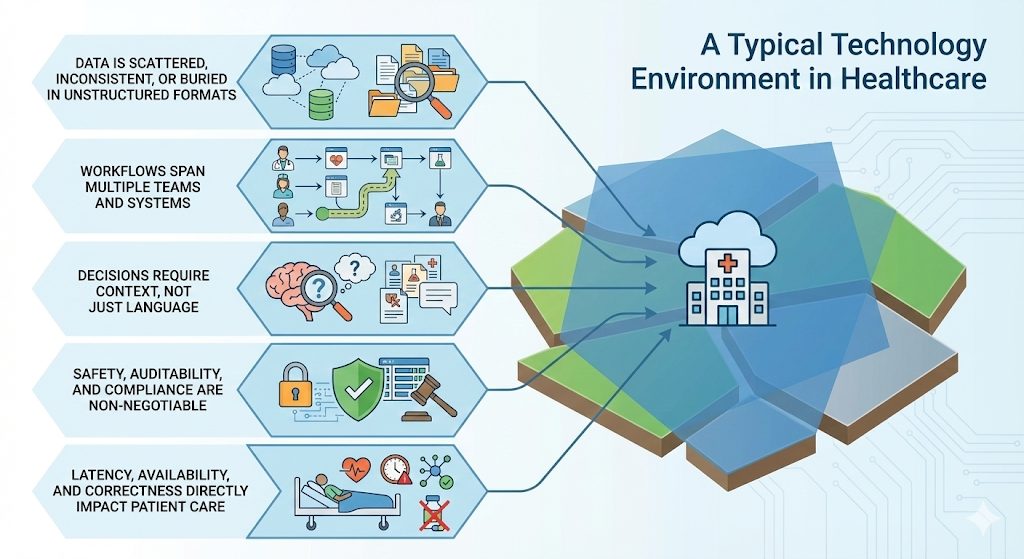

From the surface, the remaining work looks straightforward: just add more workflows, more data, and more guardrails. But healthcare is not a typical software environment. It is fragmented, idiosyncratic, and deeply dependent on context and rules that change by location, provider, patient type, visit type, and payer.

The reality: healthcare is different, and you can’t bolt a generic AI agent onto it.

Agents built without accounting for that difference break the moment they encounter real-world complexity.

Below the Surface: What It Really Takes to Build a Healthcare AI Agent

To build a safe, reliable, action-taking healthcare agent, organizations must solve all of the following, not as features but as foundational capabilities. A healthcare-ready agent has to account for an entire ecosystem of challenges that emerge when it must reason or take action inside a health system or ambulatory practice.

These are the hidden challenges that teams inevitably face:

Understanding Context, Not Just Language

Healthcare AI agents must do more than respond; they must understand context and explain their reasoning. Patients have histories, risk factors, medication interactions, recent admissions, and scheduling constraints. Staff conversations demand accuracy and timeliness. Agents must interpret these signals in real time and reason over them safely.

This demands:

- Access to longitudinal, normalized data

- Context-aware retrieval

- Clinical logic and guardrails

- Deterministic next-best-action planning

- Capture of the tacit clinical and operational knowledge that today lives only in the heads of clinical and operations staff

Without contextual grounding, agents sound smart but make dangerous mistakes.

Taking Safe, Clinically Aware Action

Healthcare tasks aren’t one-step API calls. They are stateful processes: referrals, follow-ups, outreach sequences, task routing, pre-visit summaries, or multi-step scheduling involving insurance, visit types, and locations.

An operational agent must support:

- Long-running workflows

- Controlled retries

- State transitions

- Error handling

- Auditability

Most agent frameworks break the moment a workflow lasts longer than a conversation.
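To make “stateful” concrete, here is a minimal Python sketch of a referral workflow modeled as an explicit state machine with bounded retries and an append-only audit trail. The states, the `ReferralWorkflow` class, and the simulated eligibility check are hypothetical illustrations, not how any particular platform does it. A production system would also persist this state so it survives restarts and outlives any single conversation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Callable


class State(Enum):
    RECEIVED = "received"
    INSURANCE_VERIFIED = "insurance_verified"
    SCHEDULED = "scheduled"
    COMPLETED = "completed"
    FAILED = "failed"


# Legal transitions (hypothetical); anything else is rejected before a side effect runs.
TRANSITIONS: dict[State, set[State]] = {
    State.RECEIVED: {State.INSURANCE_VERIFIED, State.FAILED},
    State.INSURANCE_VERIFIED: {State.SCHEDULED, State.FAILED},
    State.SCHEDULED: {State.COMPLETED, State.FAILED},
    State.COMPLETED: set(),
    State.FAILED: set(),
}


def _now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass
class ReferralWorkflow:
    """Hypothetical referral workflow. In production this would be persisted
    (e.g. in a database) so it outlives any single conversation."""
    referral_id: str
    state: State = State.RECEIVED
    max_retries: int = 3
    attempts: dict[str, int] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def _transition(self, new_state: State, reason: str) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.value} -> {new_state.value}")
        self.audit_log.append({"at": _now(), "from": self.state.value,
                               "to": new_state.value, "reason": reason})
        self.state = new_state

    def run_step(self, step: Callable[[str], None], target: State) -> bool:
        """Run one side-effecting step with bounded retries, then advance or fail."""
        if target not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state.value} to {target.value}")
        key = target.value
        while self.attempts.get(key, 0) < self.max_retries:
            self.attempts[key] = self.attempts.get(key, 0) + 1
            try:
                step(self.referral_id)
            except Exception as exc:  # every failed attempt is recorded for audit
                self.audit_log.append({"at": _now(), "step": key,
                                       "attempt": self.attempts[key], "error": str(exc)})
                continue
            self._transition(target, f"{key} succeeded on attempt {self.attempts[key]}")
            return True
        self._transition(State.FAILED, f"{key} failed after {self.max_retries} attempts")
        return False


calls = {"n": 0}


def flaky_eligibility_check(referral_id: str) -> None:
    # Simulates a payer endpoint that times out once, then succeeds.
    calls["n"] += 1
    if calls["n"] == 1:
        raise TimeoutError("payer endpoint timed out")


wf = ReferralWorkflow("ref-123")
wf.run_step(flaky_eligibility_check, State.INSURANCE_VERIFIED)
wf.run_step(lambda rid: None, State.SCHEDULED)
print(wf.state.value, len(wf.audit_log))  # scheduled 3
```

The design choice that matters is rejecting an illegal transition before the step runs: a side effect such as booking a slot never executes from the wrong state, and every attempt, successful or not, leaves a record.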

Making Sense of Healthcare Data in All Its Forms

Clinical data is inconsistent, multi-modal, and often unstructured. Healthcare is full of:

- PDFs, faxes, images, C-CDA documents

- HL7 v2 messages generated differently at every organization

- Local terminologies and free-text idiosyncrasies

- Claims feeds

- Missing fields, inconsistent codes, partial documents

Before an agent can act, the data must be:

- Ingested

- Standardized

- Cleaned

- Labeled

- Governed

- Made safely consumable

This is the largest hidden cost and most underestimated part of the entire problem.
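As a small illustration of the standardization step, the sketch below parses the PID segment of HL7 v2 messages from two hypothetical senders that encode the same facts differently, plus one malformed message, and maps them to one normalized record. It flags what it cannot resolve instead of guessing. The site names, the `SEX_MAP` table, and the output field names are assumptions for illustration; the parser ignores escape sequences, repeated fields beyond the first, and the many other segments a real interface engine must handle.

```python
from __future__ import annotations

from datetime import datetime

# Hypothetical site-specific codes for administrative sex (PID-8).
# Each new sender tends to add rows to a table like this.
SEX_MAP = {
    "M": "male", "MALE": "male",
    "F": "female", "FEMALE": "female",
    "O": "other", "U": "unknown",
}


def parse_segments(message: str) -> dict[str, list[list[str]]]:
    """Split an HL7 v2 message into segments (CR-delimited) and fields (|)."""
    segments: dict[str, list[list[str]]] = {}
    for raw in message.replace("\n", "\r").split("\r"):
        if raw.strip():
            fields = raw.split("|")
            segments.setdefault(fields[0], []).append(fields)
    return segments


def get_field(fields: list[str], n: int) -> str:
    """Return field n of a non-MSH segment (e.g. PID-5), or '' if absent."""
    return fields[n] if n < len(fields) else ""


def normalize_dob(value: str) -> str | None:
    """HL7 dates are YYYYMMDD[HHMM...]; some senders send ISO dates instead."""
    try:
        return datetime.strptime(value.replace("-", "")[:8], "%Y%m%d").date().isoformat()
    except ValueError:
        return None  # flag for review rather than guess


def normalize_patient(message: str) -> dict:
    pid = parse_segments(message).get("PID", [[]])[0]
    family, _, rest = get_field(pid, 5).partition("^")
    raw_sex = get_field(pid, 8).strip().upper()
    record = {
        # First repetition, first component of PID-3 (patient identifier list).
        "mrn": get_field(pid, 3).split("~")[0].split("^")[0] or None,
        "family": family or None,
        "given": rest.split("^")[0] or None,
        "birth_date": normalize_dob(get_field(pid, 7)),
        "gender": SEX_MAP.get(raw_sex, "unknown"),
    }
    issues = [k for k, v in record.items() if v is None]
    if raw_sex not in SEX_MAP:
        issues.append("gender")
    record["issues"] = issues
    return record


site_a = "\r".join([
    r"MSH|^~\&|SITE_A|FAC_A|||202401010830||ADT^A04|MSG001|P|2.5",
    "PID|1||12345^^^SITE_A^MR||Doe^Jane||19850302|F",
])
site_b = "\r".join([
    r"MSH|^~\&|SITE_B|FAC_B|||202401010915||ADT^A04|MSG002|P|2.3",
    "PID|1||A-778^^^SITE_B^MR||SMITH^JOHN^Q||1990-07-14|Male",
])
site_c = "PID|1||99^^^SITE_C^MR||Roe^Alex||UNKNOWN|X"  # malformed DOB, unmapped sex code

for msg in (site_a, site_b, site_c):
    print(normalize_patient(msg))
```

Even this toy version needs per-sender lookup tables and an explicit "issues" channel; multiply that by every segment, code system, and sender, and the scale of the hidden cost becomes clearer.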

Connecting to Systems That Don’t Want to Talk

Interoperability is not “plumbing”; it’s table stakes. EHRs differ drastically in structure, access patterns, data quality, and reliability. Many require costly, long-lead-time integrations or specialized operators.

Real-world agent building requires:

- Multi-method EHR connectivity (APIs, HL7, UI automation, document parsing)

- Real-time event handling

- Write-back into the EHR

- Cost-efficient extraction patterns

- Resilience to outages or rate limits

- Support for national networks like Surescripts and major labs

- Handling long-tail EMRs in fragmented markets

Without this, you end up with agents that “look” connected but fail when real data arrives. Agents need to be grounded in your organization’s data.
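Resilience to rate limits is one of the few items on this list that fits in a few lines. The sketch below retries a connector call with exponential backoff and full jitter, honoring a server-supplied Retry-After value when one is present. `RateLimited` and `fake_ehr_call` are hypothetical stand-ins for whatever a real EHR client raises and returns; the `sleep` parameter is injectable so the example runs instantly.

```python
from __future__ import annotations

import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error an EHR client raises on HTTP 429 or 503."""

    def __init__(self, retry_after: Optional[float] = None) -> None:
        super().__init__("rate limited")
        self.retry_after = retry_after  # seconds, if the server said so


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry fn on RateLimited; any other exception propagates immediately."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except RateLimited as exc:
            if attempt == max_attempts:
                raise  # give up and surface the error to the caller
            if exc.retry_after is not None:
                delay = min(exc.retry_after, max_delay)  # honor the server's hint
            else:
                # Full jitter: uniform in [0, min(max_delay, base * 2^(attempt-1))]
                delay = random.uniform(0.0, min(max_delay, base_delay * 2 ** (attempt - 1)))
            sleep(delay)
    raise RuntimeError("unreachable")


# Simulated EHR endpoint: throttles twice, then answers.
responses: list = [RateLimited(retry_after=2.0), RateLimited(), {"patient_id": "12345", "status": "ok"}]


def fake_ehr_call() -> dict:
    item = responses.pop(0)
    if isinstance(item, Exception):
        raise item
    return item


delays: list[float] = []
result = call_with_backoff(fake_ehr_call, sleep=delays.append)
print(result, delays)
```

Only the rate-limit error is retried. Anything else, such as a malformed request, propagates immediately, because blindly retrying a write-back that may have partially succeeded risks duplicate appointments or orders.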

Operating at Enterprise Scale

Healthcare organizations require agents that meet enterprise-grade standards such as:

- Attribute-based access control

- Compliance-embedded operations

- Full observability

- Real-time logs and audit trails

- Zero-trust data governance

- Support for flexible cloud-native and hybrid deployments

Every AI decision must be explainable, logged, permissioned, and auditable.
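Attribute-based access control decides each request from attributes of the caller, the action, and the record, rather than from role alone. The minimal sketch below uses a hypothetical policy table, roles, locations, and sensitivity labels to show two of the properties listed above: decisions are permissioned (deny by default) and explainable (every decision, allowed or denied, is logged with a reason).

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Subject:
    id: str
    role: str                  # e.g. "agent", "scheduler", "nurse"
    locations: frozenset[str]  # care sites this principal may act for


@dataclass(frozen=True)
class Resource:
    patient_id: str
    location: str
    sensitivity: str           # e.g. "normal", "behavioral_health"


# Hypothetical policy table; a real deployment would load this from governed config.
ROLE_ACTIONS = {
    "agent": {"read_schedule", "book_appointment", "read_demographics"},
    "scheduler": {"read_schedule", "book_appointment"},
    "nurse": {"read_schedule", "read_demographics", "read_clinical_notes"},
}

AUDIT_LOG: list[dict] = []


def evaluate(subject: Subject, action: str, resource: Resource) -> tuple[bool, str]:
    """Return (decision, human-readable reason) from subject/resource attributes."""
    if action not in ROLE_ACTIONS.get(subject.role, set()):
        return False, f"role '{subject.role}' may not perform '{action}'"
    if resource.location not in subject.locations:
        return False, f"no access at location '{resource.location}'"
    if resource.sensitivity != "normal" and subject.role == "agent":
        return False, "sensitive records require a human reviewer"
    return True, "permitted"


def authorize(subject: Subject, action: str, resource: Resource) -> bool:
    """Deny by default and log every decision, allowed or not."""
    allowed, reason = evaluate(subject, action, resource)
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "subject": subject.id, "action": action,
        "patient": resource.patient_id, "allowed": allowed, "reason": reason,
    })
    return allowed


bot = Subject("agent:intake-01", "agent", frozenset({"clinic-north"}))
routine = Resource("p-001", "clinic-north", "normal")
sensitive = Resource("p-002", "clinic-north", "behavioral_health")

print(authorize(bot, "book_appointment", routine))     # True
print(authorize(bot, "read_clinical_notes", routine))  # False: role lacks action
print(authorize(bot, "read_demographics", sensitive))  # False: sensitivity rule
```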

Empowering Operations Teams to Operate AI Safely

A production agent is not a set-it-and-forget-it system; it must be configurable, not hardcoded. Operational teams need:

- Monitoring: Visibility into behavior

- Configuration: Controls over workflows and actions

- Human oversight: Validation, overrides, pause/resume

- Safety mechanisms: Safe ways to adjust or extend capabilities

This is the difference between a prototype and a product that can securely and reliably scale.
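One way to keep an agent configurable rather than hardcoded is to put pause switches and review thresholds in data that operations staff can change without a deploy. In this sketch, the workflow names, the JSON shape, and the per-action `confidence` score are all hypothetical; the point is the routing logic, which fails closed for workflows it does not recognize.

```python
from __future__ import annotations

import json
from dataclasses import dataclass

# Hypothetical ops-editable config, e.g. loaded from a file or admin UI.
CONFIG_JSON = """
{
  "outreach_reminders":  {"paused": false, "review_below_confidence": 0.85},
  "referral_scheduling": {"paused": true,  "review_below_confidence": 0.90}
}
"""


@dataclass(frozen=True)
class WorkflowControls:
    paused: bool
    review_below_confidence: float


def load_controls(raw: str) -> dict[str, WorkflowControls]:
    return {name: WorkflowControls(**values) for name, values in json.loads(raw).items()}


def route_action(workflow: str, confidence: float, controls: dict[str, WorkflowControls]) -> str:
    """Decide what happens to a proposed agent action under current ops settings."""
    c = controls.get(workflow)
    if c is None:
        return "reject_unknown_workflow"  # fail closed
    if c.paused:
        return "hold_paused"              # ops has paused this workflow
    if confidence < c.review_below_confidence:
        return "queue_for_human_review"
    return "execute"


controls = load_controls(CONFIG_JSON)
print(route_action("outreach_reminders", 0.92, controls))   # execute
print(route_action("outreach_reminders", 0.60, controls))   # queue_for_human_review
print(route_action("referral_scheduling", 0.99, controls))  # hold_paused
```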

Providing a Safe, Governed Environment for Developers

Even with a strong operations team, developers need:

- Queryable clean data

- Consumable, governed APIs

- High-level query interfaces

- Consistent data models

- Sandboxes

- Documentation

Otherwise, customization becomes brittle, slow, and risky.

Why This Matters

The real reason so many organizations rethink “build vs. buy” is not a lack of capital or resources; it is that building all of this takes years, not months. It’s not just building the agent. It’s owning the entire operational surface that makes the agent reliable.

This is why we built XCaliber:

To let health systems focus on what they want to build: AI that improves care, without spending years building what they must build first.

Merlin, our voice-enabled, EHR-integrated healthcare assistant, is simply the most visible expression of that philosophy:

Autonomous, real-time decision-making and automation, with governed intelligence delivered safely inside healthcare workflows.

Our team has spent more than 20 years across Google Health, Athenahealth, academic medical centers, and digital-health infrastructure, and the last 3+ years building the XCaliber Platform, the foundation that powers Merlin.

This perspective comes not from theory, but from years of working in the friction points of healthcare data, automation, and operational reality.

We built the platform to unify the capabilities required for safe, real-time healthcare AI:

- Contextual intelligence

- Reliable and safe workflow automation

- High-fidelity healthcare data products

- Interoperability and integrations

- Real-time decision-making

- Enterprise governance

- Developer extensibility

A healthcare AI agent succeeds or fails on the invisible work: the context, data intelligence, interoperability, orchestration, governance, and developer experience. The future of healthcare automation and real-time decision making will be determined by who can safely handle the complexity beneath the surface.
